I. BI Publisher 12c High Availability Architecture

My environment is as follows:

| | Node 1 | Node 2 |
|---|---|---|
| Hostname | obiee1.vtgdb.com | obiee2.vtgdb.com |
| IP | 192.168.2.131 | 192.168.2.132 |
| Shared Directory | /u01/nfsshare | |
| OS | CentOS 6.6 (64-bit) | CentOS 6.6 (64-bit) |
| OS User | obiee | obiee |
| Java Version | 1.8.0_60 | 1.8.0_60 |
| FMW_HOME | /u01/obiee/fmw | /u01/obiee/fmw |
| Domain Home | $FMW_HOME/user_projects/domains/bi | $FMW_HOME/user_projects/domains/bi |

II. Setting up the shared directory

1. Introduction to NFS

Network File System (NFS) is a way to share files between machines on a network as if the files were located on the client's local hard drive. Linux can be both an NFS server and an NFS client, which means that it can export file systems to other systems and mount file systems exported from other machines.

The NFS roles in my environment are:

| | Node 1 | Node 2 |
|---|---|---|
| NFS | NFS Server | NFS Client |
| Hostname | obiee1.vtgdb.com | obiee2.vtgdb.com |
| IP | 192.168.2.131 | 192.168.2.132 |
| Shared Directory | /u01/nfsshare | |
| OS | CentOS 6.6 (64-bit) | CentOS 6.6 (64-bit) |
| OS User | obiee | obiee |

2. Setting up NFS on Node 1 and Node 2

Install the NFS packages on Node 1 and Node 2. There are two options; we recommend Choice 1:

Choice 1 (on CentOS 6, the portmap service has been replaced by rpcbind):

Node 1:
[root@obiee1.vtgdb.com ~]# yum install nfs-utils nfs-utils-lib
[root@obiee1.vtgdb.com ~]# yum install rpcbind
Node 2:
[root@obiee2.vtgdb.com ~]# yum install nfs-utils nfs-utils-lib
[root@obiee2.vtgdb.com ~]# yum install rpcbind

Choice 2:

Node 1:
[root@obiee1.vtgdb.com Installs]# ls
nfs-utils-lib-1.1.5-11.el6.x86_64.rpm  perl-base-5.22.1-2.mga6.x86_64.rpm  portmap-6.0-4mdv2009.1.x86_64.rpm  rpm-helper-0.23.0-1mdv2009.1.noarch.rpm
[root@obiee1.vtgdb.com Installs]# rpm -ivh nfs-utils-lib-1.1.5-11.el6.x86_64.rpm
[root@obiee1.vtgdb.com Installs]# rpm -ivh portmap-6.0-4mdv2009.1.x86_64.rpm
Node 2:
[root@obiee2.vtgdb.com Installs]# ls
nfs-utils-lib-1.1.5-11.el6.x86_64.rpm  perl-base-5.22.1-2.mga6.x86_64.rpm  portmap-6.0-4mdv2009.1.x86_64.rpm  rpm-helper-0.23.0-1mdv2009.1.noarch.rpm
[root@obiee2.vtgdb.com Installs]# rpm -ivh nfs-utils-lib-1.1.5-11.el6.x86_64.rpm
[root@obiee2.vtgdb.com Installs]# rpm -ivh portmap-6.0-4mdv2009.1.x86_64.rpm

Next, start the rpcbind (formerly portmap) and nfs services on both nodes and enable them for runlevels 3 and 5:

[root@obiee1.vtgdb.com ~]# /etc/init.d/rpcbind start
[root@obiee1.vtgdb.com ~]# /etc/init.d/nfs start
[root@obiee1.vtgdb.com ~]# chkconfig --level 35 rpcbind on
[root@obiee1.vtgdb.com ~]# chkconfig --level 35 nfs on
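A quick way to confirm that both services are enabled for runlevels 3 and 5 is to inspect `chkconfig --list`. The sketch below uses a hypothetical helper, `enabled_in_3_and_5`, and only queries the system when service names are passed as arguments:

```shell
#!/usr/bin/env bash
# Succeeds if a `chkconfig --list <service>` line shows runlevels 3 and 5 as "on".
# Example line: "nfs  0:off 1:off 2:off 3:on 4:off 5:on 6:off"
enabled_in_3_and_5() {
  local line="$1"
  [[ "$line" == *"3:on"* && "$line" == *"5:on"* ]]
}

# Query the real system only when service names are given, e.g.:
#   ./check_services.sh rpcbind nfs
for svc in "$@"; do
  if enabled_in_3_and_5 "$(chkconfig --list "$svc" 2>/dev/null)"; then
    echo "$svc: enabled in runlevels 3 and 5"
  else
    echo "$svc: NOT enabled in runlevels 3 and 5" >&2
  fi
done
```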

3. Adding iptables rules to allow NFS communication

Add or uncomment the following lines in /etc/sysconfig/nfs on both nodes. They pin the lock manager, mountd and statd to fixed ports so that the firewall can allow them:

LOCKD_TCPPORT=32803
LOCKD_UDPPORT=32769
MOUNTD_PORT=892
STATD_PORT=662
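If you prefer to script this change rather than edit the file by hand, here is a minimal idempotent sketch. The helper name `set_config_value` is hypothetical; it assumes the shell-style KEY=VALUE format of /etc/sysconfig/nfs, uncomments and rewrites an existing `#KEY=` line, or appends the key if it is absent:

```shell
#!/usr/bin/env bash
# Set KEY=VALUE in a shell-style config file such as /etc/sysconfig/nfs.
# A commented line "#KEY=..." is uncommented and replaced; if KEY is
# absent altogether, the assignment is appended.
set_config_value() {
  local file="$1" key="$2" value="$3"
  if grep -Eq "^[[:space:]]*#?[[:space:]]*${key}=" "$file"; then
    sed -r -i "s|^[[:space:]]*#?[[:space:]]*${key}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Apply the fixed ports from this article when a file path is given, e.g.
# (as root): ./set_nfs_ports.sh /etc/sysconfig/nfs
if [ "$#" -gt 0 ]; then
  conf="$1"
  set_config_value "$conf" LOCKD_TCPPORT 32803
  set_config_value "$conf" LOCKD_UDPPORT 32769
  set_config_value "$conf" MOUNTD_PORT 892
  set_config_value "$conf" STATD_PORT 662
fi
```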

Restart the NFS and NFS lock services:

# /etc/init.d/nfs restart
# /etc/init.d/nfslock restart

Use the rpcinfo command to confirm that the new port settings are in effect. With the settings above, status should be registered on port 662, mountd on 892, and nlockmgr on 32803 (TCP) and 32769 (UDP):

[root@obiee2.vtgdb.com ~]# rpcinfo -p localhost
   program vers proto   port  service
    100000    4   tcp    111  portmapper
    100000    3   tcp    111  portmapper
    100000    2   tcp    111  portmapper
    100000    4   udp    111  portmapper
    100000    3   udp    111  portmapper
    100000    2   udp    111  portmapper
    100024    1   udp  45701  status
    100024    1   tcp  33383  status
    100011    1   udp    875  rquotad
    100011    2   udp    875  rquotad
    100011    1   tcp    875  rquotad
    100011    2   tcp    875  rquotad
    100005    1   udp  36947  mountd
    100005    1   tcp  47741  mountd
    100005    2   udp  32965  mountd
    100005    2   tcp  36805  mountd
    100005    3   udp  57132  mountd
    100005    3   tcp  49678  mountd
    100003    2   tcp   2049  nfs
    100003    3   tcp   2049  nfs
    100003    4   tcp   2049  nfs
    100227    2   tcp   2049  nfs_acl
    100227    3   tcp   2049  nfs_acl
    100003    2   udp   2049  nfs
    100003    3   udp   2049  nfs
    100003    4   udp   2049  nfs
    100227    2   udp   2049  nfs_acl
    100227    3   udp   2049  nfs_acl
    100021    1   udp  55069  nlockmgr
    100021    3   udp  55069  nlockmgr
    100021    4   udp  55069  nlockmgr
    100021    1   tcp  37046  nlockmgr
    100021    3   tcp  37046  nlockmgr
    100021    4   tcp  37046  nlockmgr
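The port check can also be scripted. This sketch (hypothetical helper `has_rpc_port`) parses the five columns of `rpcinfo -p` and looks for the ports configured in /etc/sysconfig/nfs. In the sample output above, status, mountd and nlockmgr are still registered on dynamic ports, so this check would flag them there; restarting nfs and nfslock after editing the file should make the fixed ports appear.

```shell
#!/usr/bin/env bash
# Reads `rpcinfo -p` output on stdin (columns: program vers proto port service)
# and succeeds if SERVICE is registered for PROTO on PORT.
has_rpc_port() {
  local service="$1" proto="$2" port="$3"
  awk -v s="$service" -v p="$proto" -v n="$port" \
    '$5 == s && $3 == p && $4 == n { found = 1 } END { exit !found }'
}

# Ports expected from the /etc/sysconfig/nfs settings in this article.
expected_ports=(
  "mountd tcp 892" "mountd udp 892"
  "status tcp 662" "status udp 662"
  "nlockmgr tcp 32803" "nlockmgr udp 32769"
)

# Check the live system only when called as: ./check_rpc_ports.sh live
if [ "${1:-}" = "live" ]; then
  out="$(rpcinfo -p localhost)"
  for check in "${expected_ports[@]}"; do
    read -r svc proto port <<< "$check"
    if printf '%s\n' "$out" | has_rpc_port "$svc" "$proto" "$port"; then
      echo "OK      $check"
    else
      echo "MISSING $check"
    fi
  done
fi
```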

Save your current iptables rules to iptables-rules-orig.txt:

# iptables-save > iptables-rules-orig.txt

Create a file called iptables-nfs-rules.txt with the following content (ports 9500 and 9502 are the default BI Administration Server and bi_server1 ports):

*filter
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [2:200]
:RH-Firewall-1-INPUT - [0:0]
-A INPUT -j RH-Firewall-1-INPUT
-A FORWARD -j RH-Firewall-1-INPUT
-A RH-Firewall-1-INPUT -i lo -j ACCEPT
-A RH-Firewall-1-INPUT -p icmp -m icmp --icmp-type any -j ACCEPT
-A RH-Firewall-1-INPUT -p esp -j ACCEPT
-A RH-Firewall-1-INPUT -p ah -j ACCEPT
-A RH-Firewall-1-INPUT -d 224.0.0.251 -p udp -m udp --dport 5353 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m udp --dport 631 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m tcp --dport 631 -j ACCEPT
-A RH-Firewall-1-INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 2049 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 22 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 111 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m state --state NEW -m udp --dport 111 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m state --state NEW -m udp --dport 2049 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 32769 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m state --state NEW -m udp --dport 32769 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 32803 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m state --state NEW -m udp --dport 32803 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 662 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m state --state NEW -m udp --dport 662 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 892 -j ACCEPT
-A RH-Firewall-1-INPUT -p udp -m state --state NEW -m udp --dport 892 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 9500 -j ACCEPT
-A RH-Firewall-1-INPUT -p tcp -m state --state NEW -m tcp --dport 9502 -j ACCEPT
-A RH-Firewall-1-INPUT -j REJECT --reject-with icmp-host-prohibited
COMMIT
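Rules that pair `-p tcp` with `-m udp` (or the reverse) are easy to miss in a file this long. iptables is expected to reject such a rule, since the udp match is only valid for UDP traffic (and the tcp match only for TCP), which would make the whole `iptables-restore` fail. A small lint sketch follows; `lint_proto_match` is a hypothetical name, and the check is a plain text match rather than a full parser:

```shell
#!/usr/bin/env bash
# Report rules whose protocol (-p) and match module (-m tcp / -m udp) disagree,
# e.g. "-p tcp ... -m udp --dport 9500". Returns 1 if any mismatch is found.
lint_proto_match() {
  local file="$1" lineno=0 line bad=0
  while IFS= read -r line; do
    lineno=$((lineno + 1))
    if [[ "$line" == *"-p tcp"* && "$line" == *"-m udp"* ]] ||
       [[ "$line" == *"-p udp"* && "$line" == *"-m tcp"* ]]; then
      echo "line $lineno: protocol/match mismatch: $line"
      bad=1
    fi
  done < "$file"
  return "$bad"
}

# Example: ./lint_rules.sh iptables-nfs-rules.txt
if [ "$#" -gt 0 ]; then
  lint_proto_match "$1"
fi
```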

Apply the new rules with iptables-restore, feeding it the file on standard input:

NOTE: iptables-restore replaces the existing rule set of the filter table. If you have already defined iptables rules, you may prefer to add the NFS rules to iptables-rules-orig.txt and restore that file instead.

# iptables-restore < iptables-nfs-rules.txt

Save the new rules so that they are reapplied automatically when the server restarts:

# service iptables save

The server is now ready to accept NFS client requests. Optionally, restart the firewall with the following command:

# service iptables restart

4. Configuring NFS Server on Node 1

Create the shared directory:

Node 1:

[root@obiee1.vtgdb.com ~]# mkdir /u01/nfsshare

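Edit /etc/exports, add the following line, and re-export the file systems:

Node 1:

[root@obiee1.vtgdb.com ~]# vi /etc/exports
/u01/nfsshare 192.168.2.132(rw,sync,no_subtree_check,no_root_squash)
[root@obiee1.vtgdb.com ~]# exportfs -ra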

The export options mean:

rw: allows the client both read and write access to the shared directory.

sync: the server replies to a request only after the changes have been committed to stable storage.

no_subtree_check: disables subtree checking. When a shared directory is a subdirectory of a larger file system, the NFS server checks the directories above it to verify permissions and details. Disabling the subtree check may increase the reliability of NFS, but slightly reduces security.

no_root_squash: lets root on the client keep root privileges on the shared directory (by default, client root is mapped to an anonymous user).
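To double-check which options a client actually gets, a hypothetical helper can read the exports file and print the option list for one directory/client pair. This sketch assumes the simple `<dir> <client>(<options>)` layout used above, with no space between the client and the parenthesis:

```shell
#!/usr/bin/env bash
# Print the options with which DIR is exported to CLIENT in an exports file
# (format: "<dir> <client>(<options>) ..."). Returns 1 if there is no entry.
export_options() {
  local file="$1" dir="$2" client="$3"
  awk -v d="$dir" -v c="$client" '
    $1 == d {
      for (i = 2; i <= NF; i++) {
        if (index($i, c "(") == 1) {
          opts = substr($i, length(c) + 2)   # drop "client("
          sub(/\)$/, "", opts)               # drop the closing ")"
          print opts
          found = 1
        }
      }
    }
    END { exit !found }' "$file"
}

# Example: ./export_opts.sh /etc/exports /u01/nfsshare 192.168.2.132
if [ "$#" -eq 3 ]; then
  opts="$(export_options "$1" "$2" "$3")" || { echo "no export of $2 to $3" >&2; exit 1; }
  echo "$2 -> $3: $opts"
fi
```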

5. Configuring NFS Client on Node 2

First, check which shares the NFS server exports:

Node 2:

[root@obiee2.vtgdb.com ~]# showmount -e 192.168.2.131
Export list for 192.168.2.131:
/u01/nfsshare 192.168.2.131

Next, create the mount point and mount the shared NFS directory:

Node 2:

[root@obiee2.vtgdb.com ~]# mkdir -p /u01/nfsshare
[root@obiee2.vtgdb.com ~]# mount -t nfs 192.168.2.131:/u01/nfsshare /u01/nfsshare

Verify the mount with the following command:

Node 2:

[root@obiee2.vtgdb.com ~]# mount | grep nfs
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)
nfsd on /proc/fs/nfsd type nfsd (rw)
192.168.2.131:/u01/nfsshare on /u01/nfsshare type nfs (rw,vers=4,addr=192.168.2.131,clientaddr=192.168.2.132)
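`mount | grep nfs` also lists the `sunrpc` and `nfsd` pseudo-filesystems, so a stricter check looks at the mount point itself. The sketch below (hypothetical `is_nfs_mounted`) reads /proc/mounts, where an NFS version 4 mount typically shows the type `nfs4`, and mounts the share only when it is missing:

```shell
#!/usr/bin/env bash
# Succeeds if TARGET is mounted with an NFS filesystem type according to a
# mounts table in /proc/mounts format:
#   <source> <target> <fstype> <options> <dump> <pass>
is_nfs_mounted() {
  local target="$1" mounts="${2:-/proc/mounts}"
  awk -v t="$target" '$2 == t && ($3 == "nfs" || $3 == "nfs4") { found = 1 }
                      END { exit !found }' "$mounts"
}

# Mount the share only if it is not mounted yet, e.g. (as root on Node 2):
#   ./mount_share.sh 192.168.2.131:/u01/nfsshare /u01/nfsshare
if [ "$#" -eq 2 ]; then
  if is_nfs_mounted "$2"; then
    echo "$2 is already mounted"
  else
    mkdir -p "$2" && mount -t nfs "$1" "$2"
  fi
fi
```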

To mount the NFS directory permanently across reboots, add the following line to /etc/fstab:

Node 2:

[root@obiee2.vtgdb.com ~]# vi /etc/fstab
192.168.2.131:/u01/nfsshare /u01/nfsshare nfs defaults 0 0
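Appending to /etc/fstab from a script twice leaves duplicate entries, so it is worth checking first. A minimal sketch with a hypothetical `add_fstab_entry` helper; the optional fourth argument replaces `defaults`, for example with `defaults,_netdev` to mark the mount as needing the network:

```shell
#!/usr/bin/env bash
# Append an NFS entry to an fstab file unless a non-comment entry for the
# same mount point (field 2) already exists.
add_fstab_entry() {
  local fstab="$1" source="$2" target="$3" opts="${4:-defaults}"
  if awk -v t="$target" '$1 !~ /^#/ && $2 == t { found = 1 } END { exit !found }' "$fstab"; then
    echo "fstab already has an entry for $target"
    return 0
  fi
  printf '%s %s nfs %s 0 0\n' "$source" "$target" "$opts" >> "$fstab"
}

# Example (as root on Node 2):
#   ./add_fstab.sh /etc/fstab 192.168.2.131:/u01/nfsshare /u01/nfsshare
if [ "$#" -ge 3 ]; then
  add_fstab_entry "$@"
fi
```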

6. Grant ownership of the shared directory /u01/nfsshare to the obiee user on both nodes

Node 1:
[root@obiee1.vtgdb.com ~]# chown -R obiee:obiee /u01/nfsshare
Node 2:
[root@obiee2.vtgdb.com ~]# chown -R obiee:obiee /u01/nfsshare
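With the default AUTH_SYS security, NFS clients send numeric UIDs and GIDs, so the `obiee` user and group should generally have the same IDs on both nodes; otherwise files written from one node may show a different owner on the other. A sketch that compares them, assuming password-less ssh from the node where it runs (`uid_gid` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Print "uid:gid" from the output of `id <user>`, e.g.
#   "uid=500(obiee) gid=500(obiee) groups=500(obiee)" -> "500:500"
uid_gid() {
  sed -n 's/^uid=\([0-9]*\)([^)]*) gid=\([0-9]*\).*/\1:\2/p' <<< "$1"
}

# Compare with the other node, e.g. on Node 1: ./compare_ids.sh obiee2.vtgdb.com
if [ "$#" -eq 1 ]; then
  local_ids="$(uid_gid "$(id obiee)")"
  remote_ids="$(uid_gid "$(ssh "$1" id obiee)")"
  if [ -n "$local_ids" ] && [ "$local_ids" = "$remote_ids" ]; then
    echo "obiee has the same uid:gid ($local_ids) on both nodes"
  else
    echo "uid:gid mismatch: local '$local_ids', $1 '$remote_ids'" >&2
  fi
fi
```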

III. Installing BI Publisher 12c on Node 1

1. Installing and configuring the JDK

Download jdk1.8.0_60 from http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Extract the JDK and set the JAVA_HOME environment variable:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/setup
[obiee@obiee1.vtgdb.com setup]$ ls
jdk-8u60-linux-x64.gz
[obiee@obiee1.vtgdb.com setup]$ tar -xzvf jdk-8u60-linux-x64.gz
[obiee@obiee1.vtgdb.com setup]$ mv jdk1.8.0_60 jdk
[obiee@obiee1.vtgdb.com setup]$ ls
jdk
[obiee@obiee1.vtgdb.com setup]$ cd ~
[obiee@obiee1.vtgdb.com ~]$ vi .bash_profile
# .bash_profile
# Get the aliases and functions
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi
# User specific environment and startup programs
PATH=$PATH:$HOME/bin
export PATH
# JAVA_HOME
export JAVA_HOME=/u01/obiee/setup/jdk
export PATH=$JAVA_HOME/bin:$PATH

Save and close the file, then reload the profile and check the Java version:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ . .bash_profile
[obiee@obiee1.vtgdb.com ~]$ java -version
java version "1.8.0_60"
Java(TM) SE Runtime Environment (build 1.8.0_60-b27)
Java HotSpot(TM) 64-Bit Server VM (build 25.60-b23, mixed mode)
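When scripting the installation, the same check can guard later steps. A sketch with a hypothetical `java_version` helper that extracts the quoted version string (Java prints it on stderr, hence `2>&1`):

```shell
#!/usr/bin/env bash
# Extract the version string from `java -version` output, e.g.
#   'java version "1.8.0_60"' -> 1.8.0_60
java_version() {
  sed -n 's/^.* version "\([^"]*\)".*/\1/p' <<< "$1" | head -n 1
}

# Check the JDK on PATH only when called as: ./check_java.sh live
if [ "${1:-}" = "live" ]; then
  ver="$(java_version "$(java -version 2>&1)")"
  case "$ver" in
    1.8.*) echo "Java $ver found on PATH" ;;
    *) echo "Expected a 1.8 JDK on PATH, found: ${ver:-none}" >&2 ;;
  esac
fi
```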

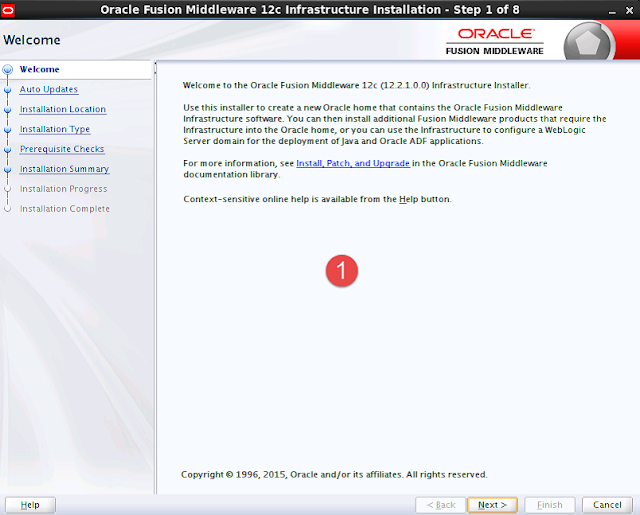

2. Installing Oracle Fusion Middleware 12c Infrastructure

Download the Fusion Middleware 12c Infrastructure software from OTN.

Unzip it and run the installer:

Node 1:

[obiee@obiee1.vtgdb.com setup]$ ls
fmw_12.2.1.0.0_infrastructure_Disk1_1of1.zip  jdk
[obiee@obiee1.vtgdb.com setup]$ unzip fmw_12.2.1.0.0_infrastructure_Disk1_1of1.zip
[obiee@obiee1.vtgdb.com setup]$ java -jar fmw_12.2.1.0.0_infrastructure.jar

Click Next on the first three screens, click Install, and then click Finish.

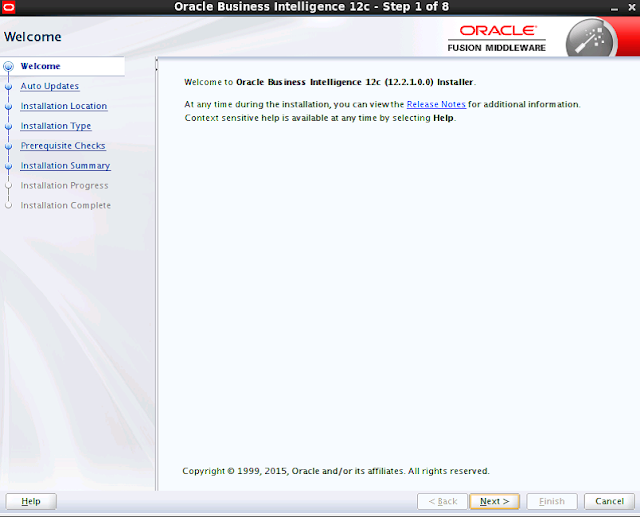

3. Installation of Oracle Business Intelligence 12c (12.2.1.0.0)

Unzip both BI archives and run the installer:

Node 1:

[obiee@obiee1.vtgdb.com setup]$ ls
jdk  fmw_12.2.1.0.0_infrastructure_Disk1_1of1.zip  fmw_12.2.1.0.0_bi_linux64_Disk1_1of2.zip  fmw_12.2.1.0.0_bi_linux64_Disk1_2of2.zip  fmw_12.2.1.0.0_infrastructure.jar
[obiee@obiee1.vtgdb.com setup]$ unzip fmw_12.2.1.0.0_bi_linux64_Disk1_1of2.zip
[obiee@obiee1.vtgdb.com setup]$ unzip fmw_12.2.1.0.0_bi_linux64_Disk1_2of2.zip
[obiee@obiee1.vtgdb.com setup]$ ./bi_platform-12.2.1.0.0_linux64.bin

Click Next on each of the four installer screens.

4. Configuring Business Intelligence

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/fmw/bi/bin
[obiee@obiee1.vtgdb.com bin]$ ./config.sh

Click Next on each of the six configuration screens.

After the configuration completes, you can access BI Analytics, BI Publisher, Visual Analyzer (VA), Enterprise Manager, the Administration Console, Map Viewer, etc.
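These entry points can be probed from the command line. The paths and ports below are assumptions based on a default 12c domain (Administration Server on 9500, bi_server1 on 9502, the same ports opened in the firewall rules); `bi_urls` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print the usual OBIEE 12c entry points for a host.
# ASSUMPTION: default ports 9500 (Admin Server) and 9502 (bi_server1);
# pass other ports as the 2nd and 3rd arguments if your domain differs.
bi_urls() {
  local host="$1" admin="${2:-9500}" managed="${3:-9502}"
  printf '%s\n' \
    "http://$host:$managed/analytics" \
    "http://$host:$managed/xmlpserver" \
    "http://$host:$managed/va" \
    "http://$host:$managed/mapviewer" \
    "http://$host:$admin/em" \
    "http://$host:$admin/console"
}

# Print the HTTP status code of each URL, e.g.: ./bi_urls.sh obiee1.vtgdb.com
if [ "$#" -ge 1 ]; then
  bi_urls "$@" | while read -r url; do
    code="$(curl -s -o /dev/null -w '%{http_code}' "$url")"
    echo "$code $url"
  done
fi
```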

IV. Installing BI Publisher 12c on Node 2

Create the installation directories:

Node 2:

[obiee@obiee2.vtgdb.com ~]$ mkdir -p /u01/obiee/setup
[obiee@obiee2.vtgdb.com ~]$ mkdir -p /u01/obiee/fmw

Copy the JDK and the Fusion Middleware home from Node 1 to Node 2:

Node 2:

[obiee@obiee2.vtgdb.com ~]$ cd /u01/obiee/setup
[obiee@obiee2.vtgdb.com setup]$ scp -r 192.168.2.131:$PWD/jdk .
[obiee@obiee2.vtgdb.com setup]$ cd /u01/obiee
[obiee@obiee2.vtgdb.com obiee]$ scp -r 192.168.2.131:$PWD/fmw .
[obiee@obiee2.vtgdb.com obiee]$ ls
fmw  setup
[obiee@obiee2.vtgdb.com obiee]$ cd setup
[obiee@obiee2.vtgdb.com setup]$ ls
jdk

Rename the copied application and domain directories on Node 2 so that they do not conflict with the domain that is unpacked from the template later:

Node 2:

[obiee@obiee2.vtgdb.com applications]$ pwd
/u01/obiee/fmw/user_projects/applications
[obiee@obiee2.vtgdb.com applications]$ ls
bi
[obiee@obiee2.vtgdb.com applications]$ mv bi bi_bak
[obiee@obiee2.vtgdb.com applications]$ ls
bi_bak
[obiee@obiee2.vtgdb.com applications]$ cd /u01/obiee/fmw/user_projects/domains
[obiee@obiee2.vtgdb.com domains]$ ls
bi
[obiee@obiee2.vtgdb.com domains]$ mv bi bi_bak
[obiee@obiee2.vtgdb.com domains]$ ls
bi_bak

V. Horizontal Scaling BI Publisher 12c

Create two folders, bidata and global_cache, in the NFS shared directory /u01/nfsshare:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ mkdir -p /u01/nfsshare/{bidata,global_cache}

Copy the contents of the $DOMAIN_HOME/bidata folder to the NFS bidata folder. The Oracle documentation refers to this folder as the ‘Singleton Data Directory’ (SDD); it contains the RPD and the catalog.

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cp -R /u01/obiee/fmw/user_projects/domains/bi/bidata/* /u01/nfsshare/bidata
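Two details about this copy: the `*` glob skips hidden entries, and `cp -R` does not preserve timestamps. Copying with `cp -a .../bidata/. /u01/nfsshare/bidata/` avoids both. Either way, `diff -rq` can confirm that the NFS copy matches the original; `same_tree` below is just a hypothetical wrapper:

```shell
#!/usr/bin/env bash
# Succeeds if the two directory trees have the same files with the same
# content; `diff -rq` prints only the names of differing or missing files.
same_tree() {
  diff -rq "$1" "$2"
}

# Example (Node 1):
#   ./verify_copy.sh /u01/obiee/fmw/user_projects/domains/bi/bidata /u01/nfsshare/bidata
if [ "$#" -eq 2 ]; then
  if same_tree "$1" "$2"; then
    echo "copy verified"
  else
    echo "copy differs" >&2
  fi
fi
```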

Stop the BI Publisher server:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/fmw/user_projects/domains/bi/bitools/bin
[obiee@obiee1.vtgdb.com bin]$ ./stop.sh

Edit bi_environment.xml and change the singleton data directory path to the NFS copy, /u01/nfsshare/bidata:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ vi /u01/obiee/fmw/user_projects/domains/bi/config/fmwconfig/bienv/core/bi_environment.xml
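If you script this edit, a sed substitution is enough. This is a sketch that assumes the element is named `bi:singleton-data-directory` and sits on a single line (check your file first); it keeps a `.bak` copy of the original, and `set_sdd` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Point the singleton data directory element at NEWDIR.
# ASSUMPTION: the element is written on one line as
#   <bi:singleton-data-directory>...</bi:singleton-data-directory>
# sed -i.bak keeps the original file as FILE.bak.
set_sdd() {
  local file="$1" newdir="$2"
  sed -i.bak \
    "s|<bi:singleton-data-directory>[^<]*</bi:singleton-data-directory>|<bi:singleton-data-directory>${newdir}</bi:singleton-data-directory>|" \
    "$file"
}

# Example (Node 1, with BI stopped):
#   ./set_sdd.sh /u01/obiee/fmw/user_projects/domains/bi/config/fmwconfig/bienv/core/bi_environment.xml /u01/nfsshare/bidata/
if [ "$#" -eq 2 ]; then
  set_sdd "$1" "$2"
  grep 'singleton-data-directory' "$1"
fi
```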

Start the BI Publisher server:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/fmw/user_projects/domains/bi/bitools/bin
[obiee@obiee1.vtgdb.com bin]$ ./start.sh

In Fusion Middleware Control (EM), go to the BI Instance page and open the Performance tab. Click Lock and Edit, and change the Global Cache path to the global_cache folder on the NFS share (/u01/nfsshare/global_cache).

Click Apply, and then restart the BI components from the Availability tab.

Next, shut down the BI Publisher server on Node 1:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/fmw/user_projects/domains/bi/bitools/bin
[obiee@obiee1.vtgdb.com bin]$ ./stop.sh

Then, run the following command to create the template file:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/fmw/user_projects/domains/bi/bitools/bin
[obiee@obiee1.vtgdb.com bin]$ ./clone_bi_machine.sh 192.168.2.132 /u01/nfsshare/pack.jar

On Node 2, run the following command to unpack the template into the domain home:

Node 2:

[obiee@obiee2.vtgdb.com ~]$ /u01/obiee/fmw/oracle_common/common/bin/unpack.sh -domain=/u01/obiee/fmw/user_projects/domains/bi -template=/u01/nfsshare/pack.jar

Now, start Node Manager on Node 2:

Node 2:

[obiee@obiee2.vtgdb.com ~]$ cd /u01/obiee/fmw/user_projects/domains/bi/bin/
[obiee@obiee2.vtgdb.com bin]$ ./startNodeManager.sh &
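Because Node Manager is started in the background, you may want to wait until it accepts connections before starting the servers from Node 1. A sketch using bash's `/dev/tcp` redirection; the port is whatever `ListenPort` is set to in the domain's nodemanager.properties (assumed here to live under `$DOMAIN_HOME/nodemanager/`, worth verifying), and `wait_for_port` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Try to open a TCP connection to HOST:PORT up to TRIES times, one second
# apart, using bash's /dev/tcp pseudo-device. Succeeds on the first connect.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" i
  for ((i = 0; i < tries; i++)); do
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    if ((i + 1 < tries)); then
      sleep 1
    fi
  done
  return 1
}

# Example (from Node 1), with the ListenPort read from nodemanager.properties:
#   ./wait_nm.sh obiee2.vtgdb.com <ListenPort> 60
if [ "$#" -ge 2 ]; then
  if wait_for_port "$@"; then
    echo "$1:$2 is accepting connections"
  else
    echo "$1:$2 is not reachable" >&2
  fi
fi
```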

Finally, restart the BI Publisher server on Node 1:

Node 1:

[obiee@obiee1.vtgdb.com ~]$ cd /u01/obiee/fmw/user_projects/domains/bi/bitools/bin
[obiee@obiee1.vtgdb.com bin]$ ./start.sh

VI. Configuring Load Balancer for BI Publisher

Download the guide from

https://drive.google.com/open?id=0B7a3DEnQ0-uzdFBIeHM3YWNveW8
